I am a second-year PhD student at Imperial College London, advised by Prof. Wayne Luk and Dr. Hongxiang Fan. I hold the President’s PhD Scholarship and previously completed both my Bachelor’s and Master’s degrees here. For more details, please see my CV.

My research focuses on democratizing AI through efficient ML algorithms (e.g., LLM quantization, speculative decoding, model merging, expert skipping) and systems (e.g., memory management, request scheduling, heterogeneous computing).

🔥 News

- 2025.11: 🎉🎉 FastTTS, our work on fast test-time scaling for edge devices, was accepted to ASPLOS 2026!

- 2025.09: 🎉🎉 Our paper on optimal verification granularity for test-time scaling was accepted to NeurIPS 2025!

- 2025.08: 🎉🎉 PPD, our edge-friendly speculative decoding method, was accepted to EMNLP 2025!

- 2025.06: 🎉🎉 FW-Merging, our work on scalable multi-LLM/agent merging, was accepted to ICCV 2025!

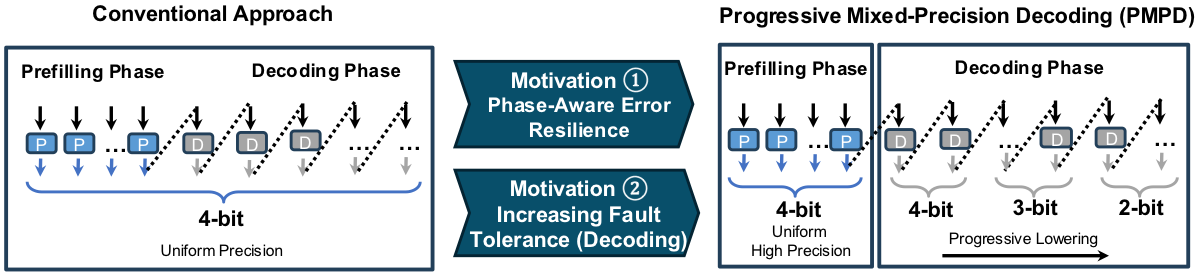

- 2025.01: 🎉🎉 PMPD, which proposes adaptive LLM quantization, was accepted to ICLR 2025!

- 2024.10: 😊😊 Started my PhD at Imperial College London with the President’s PhD Scholarship!

📝 Selected Publications

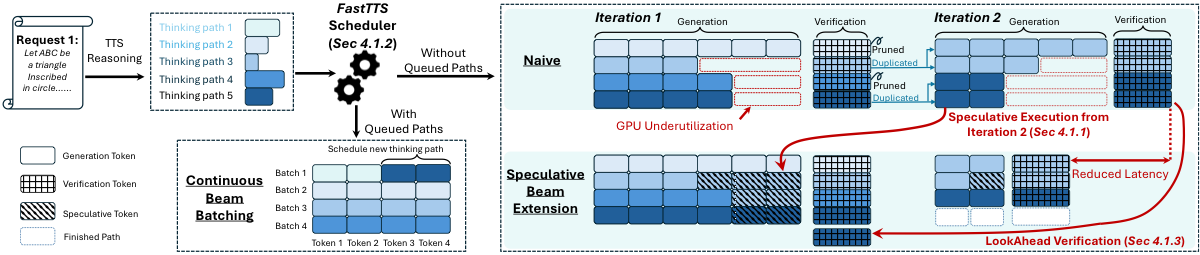

Democratizing Agentic AI with Fast Test-Time Scaling on the Edge

Hao Mark Chen, Zhiwen Mo, Guanxi Lu, Shuang Liang, Lingxiao Ma, Wayne Luk, Hongxiang Fan

ASPLOS 2026

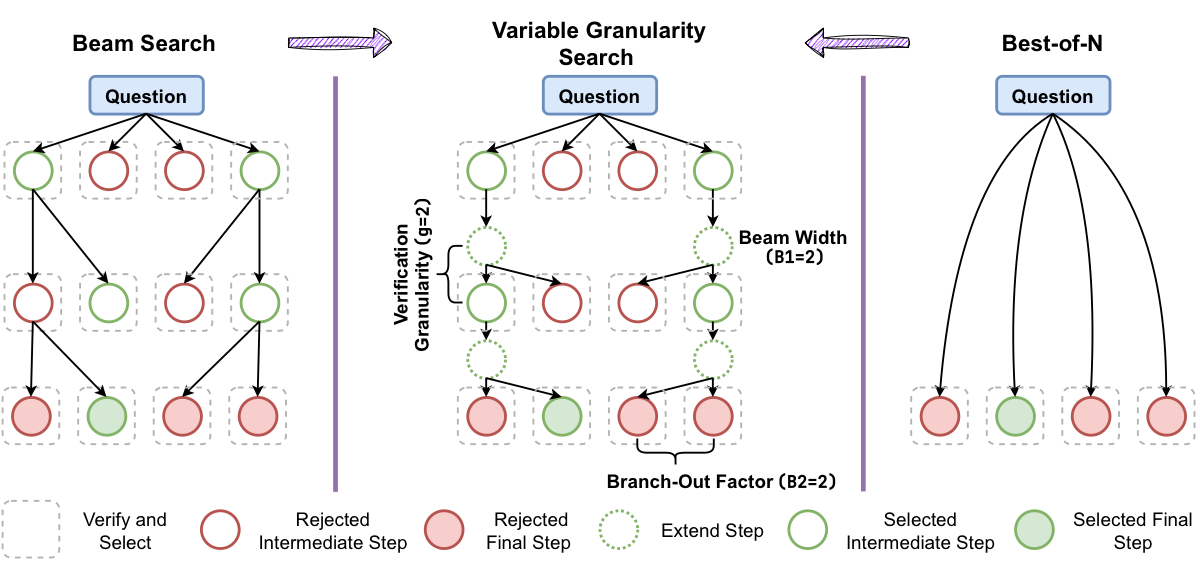

Rethinking Optimal Verification Granularity for Compute-Efficient Test-Time Scaling

Hao Mark Chen, Guanxi Lu, Yasuyuki Okoshi, Zhiwen Mo, Masato Motomura, Hongxiang Fan

NeurIPS 2025

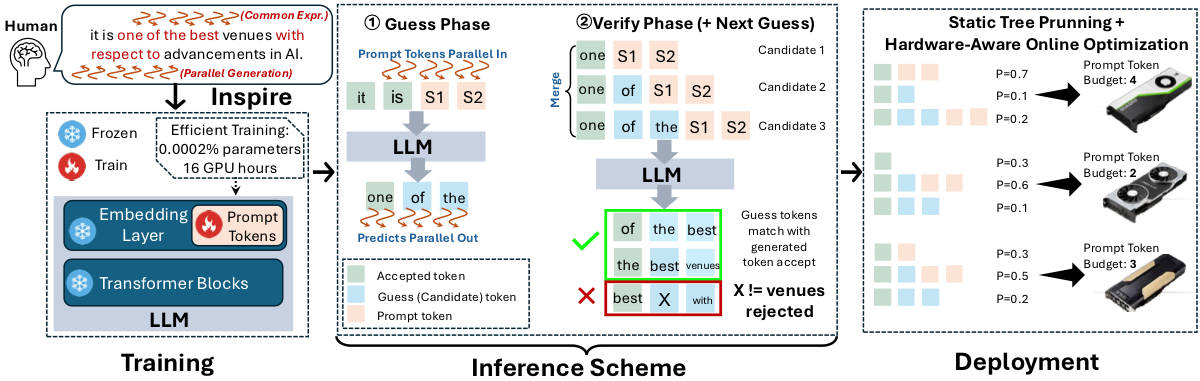

Hardware-Aware Parallel Prompt Decoding for Memory-Efficient Acceleration of LLM Inference

Hao (Mark) Chen, Wayne Luk, Ka Fai Cedric Yiu, Rui Li, Konstantin Mishchenko, Stylianos I. Venieris, Hongxiang Fan

EMNLP 2025

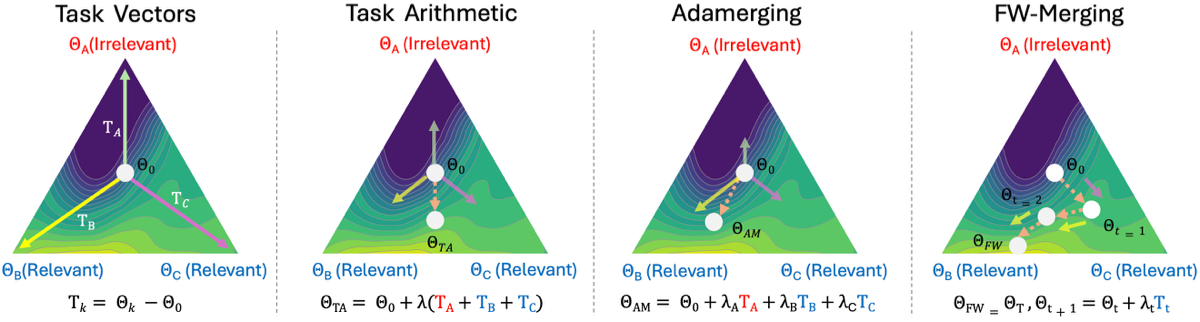

FW-Merging: Scaling Model Merging with Frank-Wolfe Optimization

Hao Mark Chen, Shell Xu Hu, Wayne Luk, Timothy Hospedales, Hongxiang Fan

ICCV 2025

Progressive Mixed-Precision Decoding for Efficient LLM Inference

Hao Mark Chen, Fuwen Tan, Alexandros Kouris, Royson Lee, Hongxiang Fan, Stylianos I. Venieris

ICLR 2025

📚 Full list of publications available on Google Scholar

🎖 Honors and Awards

- 2024 President’s PhD Scholarship, Imperial College London

- 2024 Governors’ MEng Prize in Computing (best overall performance in MEng programme), Imperial College London

- 2024 Winton Capital Applied Undergraduate Project Computing Prize, Imperial College London

- 2024 Dean’s List (Year 4), Imperial College London

- 2023 Dean’s List (Year 3), Imperial College London

- 2022 Dean’s List (Year 2), Imperial College London

- 2021 Dean’s List (Year 1), Imperial College London

- 2021 G-Research Ltd Prize, G-Research Ltd

- 2015 - 2019 Singapore SM1 Scholarship, Ministry of Education Singapore

📖 Education

- 2024 - 2028 (expected), PhD in Computer Science, Imperial College London

- 2020 - 2024, MEng in Computing, Imperial College London

💼 Industry Experience

- 2025.07 - 2025.12, Samsung AI Center, Research Intern. Worked on efficient diffusion language models.

- 2024.07 - 2024.12, Samsung AI Center, Research Intern. Worked on efficient LLM inference involving quantization, leading to a publication at ICLR 2025.

- 2023.04 - 2023.09, Qube RT, Quantitative Technologist Intern. Developed a C++ performance-monitoring system for a low-latency trading platform.

- 2022.03 - 2022.09, Huawei Technologies R&D, Graphics Modelling Intern. Worked on automatic GPU API generation.

- 2021.06 - 2021.09, Ampere Computing, Java Software Developer. Developed a log search engine for Jenkins based on Apache Lucene.

💻 Academic Service

- Conference Reviewer: ICLR (2025), NeurIPS (2025)

- Journal Reviewer: TMLR

🍞 Teaching Experience

- 2023 - 2024, Personal Math Tutor, Imperial College London